Artificial Intelligence, abbreviated as AI, is the use of computers to simulate human intelligence. It encompasses the techniques that allow computers to learn how to solve problems and make intelligent decisions. AI systems attempt to mimic the process experts follow when they observe, interpret and evaluate information before making a decision. To do this, many AI systems use Machine Learning (ML) algorithms, often running on powerful computing hardware, to search vast quantities of data for patterns and insights from which predictions can be made. AI algorithms differ from traditional computer programs in that their behavior is not spelled out step by step by a human programmer. Instead, it is learned from data, and it can improve as the system is trained on new data.

It’s very likely that you have already experienced artificial intelligence in your daily life. If you have ever asked Amazon Alexa to play your favorite music, Google Assistant what the weather will be like today, or Apple’s Siri to tell you a joke, you’ve already interacted with an AI system.

History of Artificial Intelligence

The term ‘artificial intelligence’ was coined by John McCarthy, an American computer and cognitive scientist, in the proposal for the 1956 Dartmouth summer research project on artificial intelligence. However, the idea was conceived earlier, in 1950, when Alan Turing asked, in effect, ‘Can a computer communicate in a way indistinguishable from a human?’. To answer this question, Turing proposed what became known as the Turing Test, which assesses a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. Many consider the Turing Test to be the idea that led to the development of chatbots and conversational AI.

Key AI milestones in recent decades include:

- 1997 – Supercomputer Deep Blue, developed by IBM, beats world chess champion Garry Kasparov

- 2008 – Google launches its voice recognition app on the iPhone (an early step towards Apple’s Siri, Google Assistant and Amazon Alexa)

- 2011 – IBM Watson defeats Ken Jennings and Brad Rutter, two of the most successful contestants in the history of the US TV quiz show Jeopardy!

- 2013 – Tomáš Mikolov, a Czech computer scientist, and his colleagues at Google publish Word2Vec, a Natural Language Processing technique for representing words as vectors and arguably one of the most influential machine learning techniques for text analysis and chatbots

- 2014 – 64 years after the Turing Test was conceived, a chatbot called Eugene Goostman is reported to have passed the Turing Test, although many researchers dispute the claim

6 Key Artificial Intelligence Concepts

Knowledge about AI is not only for data scientists and software engineers. It will touch every part of our lives, and is already doing so in the world of HR in functions such as recruitment, training, onboarding, performance analysis, retention, and employee service management. Those responsible for delivering HR Services to employees can benefit by understanding AI’s core concepts in more detail so that they may better grasp how to apply and manage it. So why not start now? Below we list 6 ‘must know’ AI concepts to help you on your journey to AI fluency.

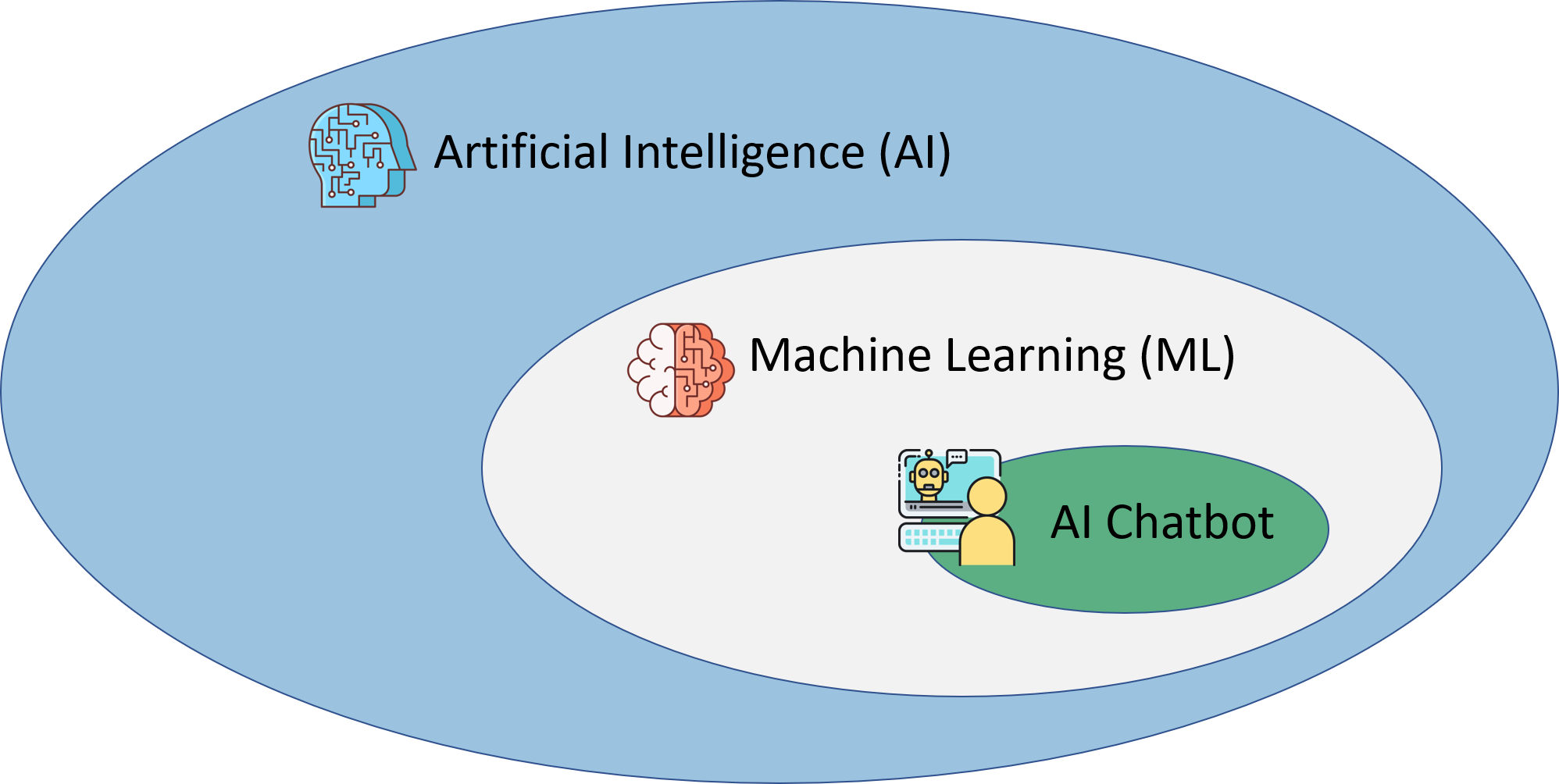

1. Machine Learning

Machine Learning (ML) is a subset of AI that uses computer algorithms to analyze data and make intelligent decisions based on what it has learned, without being explicitly programmed. Hal Daumé III, Associate Professor of Computer Science at the University of Maryland, gives a succinct definition of ML:

“At a basic level, Machine Learning is about predicting the future based on the past.”

To make accurate predictions, an AI system is trained on large quantities of structured and unstructured data, often referred to as ‘big data’. It runs a Machine Learning algorithm over this data; a widely used family of such algorithms is deep neural networks, which stack many layers of simple computing units (see concept 4).

The benefit of a Machine Learning algorithm compared to a traditional rule-based algorithm is that it can keep improving as it is trained on more data, without a programmer having to rewrite its rules by hand.
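To make Daumé’s definition concrete, here is a minimal Python sketch of ‘predicting the future based on the past’, assuming the simplest possible model: a straight line fitted to past observations by ordinary least squares. The function names and the weekly ticket counts are hypothetical illustrations, and real ML systems use far richer models, but the principle carries over: the model’s parameters (here, the slope and intercept) are computed from data rather than written by a programmer.

```python
# A minimal sketch of "predicting the future based on the past":
# the model's parameters are learned from data, not written by hand.

def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply a fitted (slope, intercept) model to a new input x."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical data: HR tickets received in each of the past five weeks.
weeks = [1, 2, 3, 4, 5]
tickets = [100, 110, 120, 130, 140]

model = fit_line(weeks, tickets)
print(predict(model, 6))  # 150.0
```

Adding more weeks of data and calling `fit_line` again updates the prediction without changing any code, which is the contrast with rule-based programs drawn above.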

2. Structured versus Unstructured Data

Around 90% of the world’s data was created in the last two years, and 80% of it is unstructured (IBM). Unstructured data is estimated to be growing at a rate of 55-65 percent each year.

Structured data, such as data stored in the rows and columns of a traditional database or in a spreadsheet like a Microsoft Excel table, is relatively easy to analyze.

By contrast, unstructured data such as emails, blog posts, images and video files is more difficult to analyze and not easily searchable. This is why organizations could make little use of it until recently, when advances in AI and Machine Learning algorithms made it possible to extract insights from it and unlock its business value.
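The difference in effort can be sketched in a few lines of Python. The leave records, emails and regular expression below are all hypothetical; the regex is a deliberately naive stand-in used only to show why free text is harder to query, not an example of how ML-based text analysis works.

```python
import re

# Structured data: every record has named fields, so a question
# becomes a direct lookup over those fields.
leave_requests = [
    {"employee": "A. Patel", "type": "vacation", "days": 5},
    {"employee": "B. Jones", "type": "sick", "days": 2},
    {"employee": "C. Okafor", "type": "vacation", "days": 3},
]
vacation_days = sum(r["days"] for r in leave_requests if r["type"] == "vacation")
print(vacation_days)  # 8

# Unstructured data: similar facts buried in free text.
emails = [
    "I'd like to book 5 days of vacation next month.",
    "Feeling unwell, I'll be off sick for 2 days.",
    "Could I take 3 vacation days in June?",
    "Please approve a week of vacation in July.",
]

# A naive, hand-written pattern: "<number> [day(s) of] vacation".
# It only catches phrasings we anticipated and silently misses
# "a week of vacation", illustrating why free text is harder to analyze.
pattern = re.compile(r"(\d+) (?:days? of )?vacation")
extracted_days = sum(int(n) for email in emails for n in pattern.findall(email))
print(extracted_days)  # 8 (the week requested in the last email is missed)
```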

The benefit of being able to evaluate the meaning of unstructured data is that an organization can act upon it and make better business decisions. For example, HR can combine employee questions from a variety of sources, such as call center transcripts and chatbot conversations, to spot patterns in the information and make real-time decisions that can improve HR operational efficiency and the employee experience.

Whether the data is structured or unstructured, algorithms are applied to it to extract insights and enable AI decision-making.

3. Traditional Algorithm vs AI Algorithm

“An algorithm must be seen to be believed.”

Donald Ervin Knuth, American computer scientist, mathematician, and professor emeritus at Stanford University

An algorithm is a step-by-step procedure for solving a problem or performing a calculation. In a traditional program, a programmer writes out every step explicitly, and the computer follows those steps exactly, in order. For example, to make yourself a drink, you have to follow a sequence of steps in the right order. If you do something in the wrong order, you might end up making a mess. To illustrate, here are the instructions for making a smoothie:

- Add fruit to the blender

- Add milk to the blender

- Put the lid on the blender

- Switch the blender on

Imagine if we missed out one of the steps or reversed the order. We could end up switching on the blender with nothing in it. Or there could just be milk in the blender and no fruit.
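The smoothie steps can be written as a traditional, explicitly programmed algorithm. In this minimal Python sketch, the step names and outcome messages are hypothetical; the point is that the programmer spells out every rule, and feeding the same steps in a different order changes the result.

```python
# A minimal sketch of a traditional, explicitly programmed algorithm:
# the programmer spells out every step, and the order of steps matters.

def make_smoothie(steps):
    """Follow the given steps in order and report what comes out of the blender."""
    contents = []
    lid_on = False
    for step in steps:
        if step == "add fruit":
            contents.append("fruit")
        elif step == "add milk":
            contents.append("milk")
        elif step == "put lid on":
            lid_on = True
        elif step == "switch on":
            if not contents:
                return "nothing to blend"
            if not lid_on:
                return "mess"
            if {"fruit", "milk"} <= set(contents):
                return "smoothie"
            return "not a smoothie"
        else:
            raise ValueError(f"unknown step: {step!r}")
    return "blender never switched on"


recipe = ["add fruit", "add milk", "put lid on", "switch on"]
print(make_smoothie(recipe))                                   # smoothie
print(make_smoothie(list(reversed(recipe))))                   # nothing to blend
print(make_smoothie(["add milk", "put lid on", "switch on"]))  # not a smoothie
```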

On the other hand, a ‘blender program’ using a Machine Learning AI algorithm would learn from patterns in relevant smoothie-making data, such as past recipes and how they turned out, without the programmer having to explicitly program any instructions. It’s like giving an AI algorithm the smoothie and asking it to work out how best to make it from what it has learned about food and recipes.
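For contrast, here is a minimal sketch of a ‘blender program’ that learns from examples instead of instructions. It uses 1-nearest-neighbor classification, one of the simplest machine learning techniques, chosen here for illustration rather than because any real system uses it; the past blends and their labels are hypothetical data. (`math.dist` requires Python 3.8 or later.)

```python
import math

# Hypothetical past blends: (grams of fruit, milliliters of milk) -> outcome.
# No mixing rules are written by hand; only labeled examples are provided.
past_blends = [
    ((150, 200), "good"),
    ((200, 250), "good"),
    ((0, 300), "bad"),    # milk only
    ((250, 0), "bad"),    # fruit only
    ((100, 600), "bad"),  # far too much milk
]


def predict_outcome(recipe, examples):
    """1-nearest-neighbor: copy the outcome of the most similar past blend."""
    _, outcome = min(examples, key=lambda ex: math.dist(recipe, ex[0]))
    return outcome


print(predict_outcome((170, 210), past_blends))  # good
print(predict_outcome((10, 320), past_blends))   # bad
```

Adding more labeled blends changes the program’s behavior without touching its code, which is the essential difference from the step-by-step version.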

4. Neural and Deep Neural Networks (or Deep Learning)

Machine Learning AI algorithms that learn by example from structured and unstructured data are a major reason why we can have ‘to and fro’ conversations with Alexa, Siri and Google Assistant. These assistants rely heavily on neural networks, which learn patterns from large amounts of example data rather than from hand-written rules.

Neural Networks take inspiration from the biological neural networks found in the human brain. Neural networks with many layers are called Deep Neural Networks, and training them is known as Deep Learning. Deep Learning is a specialized subset of Machine Learning that uses these layered networks to learn complex patterns from data. It allows AI systems to improve the quality and accuracy of their results as they are trained on more data, and it has proven very effective at a range of tasks, including image recognition, voice recognition & transcription, facial recognition, medical imaging and language translation.
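The building block of these networks can be sketched in Python. The first part below trains a single artificial neuron with the classic perceptron learning rule, so that it learns the logical AND function purely from examples. The second part wires three neurons into two layers to compute XOR, which no single neuron of this kind can do, using weights chosen by hand to show the layered structure. This is an illustration under simplifying assumptions: real Deep Neural Networks have many layers of neurons with smooth activation functions and are trained with backpropagation and gradient descent, not the perceptron rule.

```python
def neuron(weights, bias, inputs):
    """One artificial neuron: weighted sum of inputs, then a step 'firing' threshold."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=10, learning_rate=1):
    """Learn weights from labeled examples with the classic perceptron rule."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - neuron(weights, bias, inputs)
            # Nudge each weight (and the bias) in the direction that reduces the error.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Learning by example: the AND function, given only as input/output pairs.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_EXAMPLES)
print([neuron(weights, bias, x) for x, _ in AND_EXAMPLES])  # [0, 0, 0, 1]


# Layering: no single neuron can compute XOR, but two layers can.
# These weights are set by hand purely to show the layered structure.
def xor(a, b):
    hidden = [
        neuron([1, 1], -0.5, (a, b)),   # fires if a OR b
        neuron([-1, -1], 1.5, (a, b)),  # fires unless a AND b
    ]
    return neuron([1, 1], -1.5, hidden)  # fires if both hidden neurons fire


print([xor(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]])  # [0, 1, 1, 0]
```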

5. Natural Language Processing (NLP) and Natural Language Understanding (NLU)

“You shall know a word by the company it keeps.”

J.R. Firth, British linguist specializing in contextual theories of meaning, whose ideas are now used in Natural Language Processing techniques

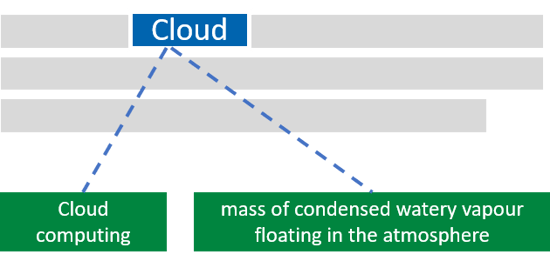

Natural Language Processing (NLP), together with its subset Natural Language Understanding (NLU), is a field of artificial intelligence (AI) that combines computational linguistics with Machine Learning (ML) algorithms and deep learning neural networks to determine the meaning of words, phrases and sentences. It does this by analyzing sentences grammatically, relationally and structurally in order to understand the context in which words are used. For instance, based on the context of a conversation, NLP can determine whether the word “cloud” refers to cloud computing or to the mass of condensed water vapor floating in the sky.

Natural Language Processing of the word “cloud” in a sentence
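The ‘cloud’ example can be approximated with a simple word-overlap heuristic in the spirit of the Lesk algorithm for word sense disambiguation. In this Python sketch, the sense labels and their signature words are hypothetical and hand-picked; modern NLP systems instead learn these associations from large amounts of text.

```python
import re

# Hypothetical "signature" words for each sense of "cloud". Real NLP systems
# learn such associations from data instead of listing them by hand.
SENSES = {
    "cloud computing": {"data", "server", "servers", "storage", "upload", "software", "online"},
    "weather": {"rain", "sky", "sun", "storm", "weather", "grey", "dark"},
}


def disambiguate(sentence):
    """Pick the sense whose signature shares the most words with the sentence."""
    words = set(re.findall(r"[a-z]+", sentence.lower()))
    scores = {sense: len(words & signature) for sense, signature in SENSES.items()}
    # On a tie, max() returns the first sense listed in SENSES.
    return max(scores, key=scores.get)


print(disambiguate("We moved our data storage to the cloud last year."))     # cloud computing
print(disambiguate("A dark cloud drifted across the sky before the rain."))  # weather
```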

NLP and NLU are the key components of conversational AI, as they enable humans to communicate with a computer, for example through a chatbot, a virtual assistant interface or a voice interface. NLP success stories in the consumer world include Apple’s Siri, Amazon Alexa and Google Assistant. These Conversational AI (CAI) capabilities are now being adopted by companies that want to improve the operational efficiency of HR Services and employees’ experience of the workplace.

How Do NLP and NLU Work?

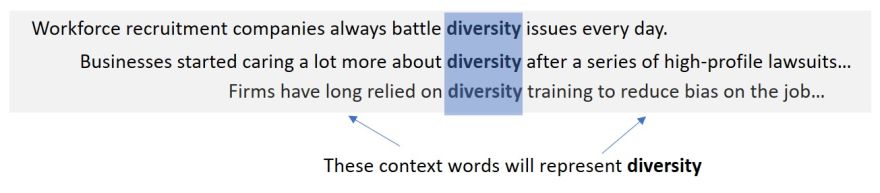

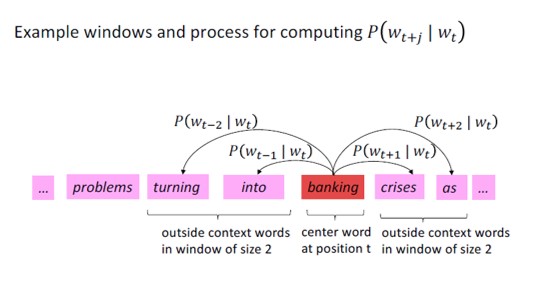

A word’s meaning is given by the words that frequently appear close-by. When a word appears in a text, its context is the set of words that appear nearby. Many contexts of a word are used to build up a representation of the word. Below are a number of contexts for the word ‘diversity’.

Statistical mathematics makes this possible. Below is a visualization of the mathematics that make modern conversational AI assistants like Amazon Alexa possible.

The Mathematics Behind Natural Language Processing – Representing words by their context (Source: CS224n: Natural Language Processing with Deep Learning, Stanford University)
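The idea of representing words by their context can be sketched with simple co-occurrence counts. Each word becomes a vector counting its neighbors, and cosine similarity compares two such vectors. This is a count-based stand-in chosen for clarity; Word2Vec and the methods taught in CS224n learn dense vectors instead. The three-sentence corpus and the window size of 2 are hypothetical choices.

```python
import math
from collections import Counter, defaultdict


def context_vectors(sentences, window=2):
    """Map each word to counts of the words found within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            start, end = max(0, i - window), min(len(words), i + window + 1)
            for j in range(start, end):
                if j != i:
                    vectors[word][words[j]] += 1
    return vectors


def cosine(a, b):
    """Cosine similarity of two sparse count vectors (1.0 = same direction)."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# A hypothetical three-sentence corpus.
corpus = [
    "we value diversity in our workforce",
    "we value inclusion in our workforce",
    "the payroll team sends payslips monthly",
]
vectors = context_vectors(corpus)
print(cosine(vectors["diversity"], vectors["inclusion"]))  # 1.0
print(cosine(vectors["diversity"], vectors["payroll"]))    # 0.0
```

Because ‘diversity’ and ‘inclusion’ appear with exactly the same neighbors in this tiny corpus, their vectors point in the same direction, while ‘payroll’ shares no neighbors with ‘diversity’.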

Another example of NLP in action is the algorithm behind Google search results. Every time you search, there are thousands, sometimes millions, of web pages with helpful information.

Google uses a whole series of algorithms, including NLP/NLU techniques, to sort through and rank hundreds of billions of webpages in its Search index, find the most relevant, useful results in a fraction of a second, and present them in a way that helps you find what you’re looking for.
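Google does not publish the full details of its ranking systems, so the following is not how Google Search works. It is a minimal Python sketch of TF-IDF, a classic information-retrieval technique that captures one basic ingredient of relevance ranking: documents that use the query’s words often, especially words that are rare across the collection, rank higher. The articles and the smoothed IDF formula are illustrative assumptions.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def rank(query, documents):
    """Return documents ordered by a simple TF-IDF relevance score for the query."""
    doc_counts = [Counter(tokenize(doc)) for doc in documents]
    n_docs = len(documents)

    def idf(term):
        # Terms that appear in fewer documents are more informative.
        df = sum(1 for counts in doc_counts if term in counts)
        return math.log((n_docs + 1) / (df + 1)) + 1

    def score(counts):
        length = sum(counts.values())
        if length == 0:
            return 0.0
        # Term frequency (share of the document's words) weighted by IDF.
        return sum(counts[term] / length * idf(term) for term in tokenize(query))

    scores = [score(counts) for counts in doc_counts]
    order = sorted(range(n_docs), key=lambda i: scores[i], reverse=True)
    return [documents[i] for i in order]


# Hypothetical HR knowledge-base articles.
articles = [
    "how to request parental leave",
    "company holiday calendar",
    "parental leave policy and parental pay",
]
for article in rank("parental leave", articles):
    print(article)
```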

Natural Language Processing and Natural Language Understanding algorithms are powerful capabilities of Conversational AI chatbot technology that HR Services teams can take advantage of today.

6. Training (the AI Model)

We have seen that AI uses a range of techniques, such as Machine Learning (ML) algorithms and deep learning neural networks, to predict outcomes based on statistical probability. To arrive at accurate predictions, the AI model must be ‘trained’ on high quality data. The better the quality of the data, the better the predictions the AI system can make: AI decision making is only as good as the data the system is fed.

Training is the process of determining the parameters of your AI model (for example, the weights of a neural network) from example data. In the case of chatbots, we can use the ongoing data from conversations with employees to train the conversational AI chatbot to be smarter and more accurate in its responses.
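Training can be illustrated with gradient descent, a widely used method for fitting model parameters. This minimal Python sketch, under the simplifying assumption of a one-parameter model y = w * x, starts from a guess for `w` and repeatedly nudges it to reduce the prediction error on the training examples. The data sets are hypothetical; the noisy one adds a single corrupted record to show how bad data pulls the learned parameter away from the true value.

```python
def train(examples, learning_rate=0.01, steps=1000):
    """Learn the parameter w in y ~ w * x by gradient descent on mean squared error."""
    w = 0.0  # start from an arbitrary guess
    n = len(examples)
    for _ in range(steps):
        # Derivative of (1/n) * sum((w*x - y)**2) with respect to w.
        gradient = sum(2 * (w * x - y) * x for x, y in examples) / n
        w -= learning_rate * gradient  # step downhill
    return w


# Hypothetical clean data: y is always exactly 2 * x.
clean = [(1, 2), (2, 4), (3, 6), (4, 8)]
# The same data plus a single corrupted record.
noisy = clean + [(5, 0)]

print(round(train(clean), 3))  # 2.0
print(round(train(noisy), 3))  # 1.091
```

One bad record out of five drags `w` from 2.0 down to about 1.09, a small-scale version of the point that AI decision making is only as good as the data it is fed.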

Training AI on Employee Questions

In order for an AI chatbot to interpret an employee question, it must first be trained on ‘domain-specific’ data. To do this, the AI system is fed examples of employee questions paired with their answers until it recognizes what each question means and can match it with the correct answer.

The first step is to cluster the employee questions that all ask the same thing (they all have the same ‘intent’) in different ways. After the AI model is trained on these clustered questions, the AI chatbot can recognize the intent of an employee’s question, determine its meaning and respond appropriately.
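Once questions have been grouped by intent, they can serve as labeled training data for an intent classifier. This Python sketch assumes the clustering step is already done and uses multinomial naive Bayes with add-one smoothing, a simple text classifier chosen for illustration; it is not necessarily what any particular chatbot product uses. The questions and intent names (`leave_balance`, `pay_date`) are hypothetical.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def train_intents(labeled_questions):
    """Count words per intent from (question, intent) pairs."""
    word_counts = defaultdict(Counter)
    intent_counts = Counter()
    vocabulary = set()
    for question, intent in labeled_questions:
        tokens = tokenize(question)
        word_counts[intent].update(tokens)
        intent_counts[intent] += 1
        vocabulary.update(tokens)
    return word_counts, intent_counts, vocabulary


def classify(question, model):
    """Return the intent with the highest naive Bayes log-probability."""
    word_counts, intent_counts, vocabulary = model
    total_questions = sum(intent_counts.values())
    best_intent, best_score = None, -math.inf
    for intent, count in intent_counts.items():
        n_words = sum(word_counts[intent].values())
        score = math.log(count / total_questions)  # prior: how common the intent is
        for token in tokenize(question):
            if token not in vocabulary:
                continue  # ignore words never seen during training
            # Add-one smoothing keeps unseen (intent, word) pairs from scoring zero.
            score += math.log((word_counts[intent][token] + 1) / (n_words + len(vocabulary)))
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent


# Hypothetical training data: differently worded questions clustered by intent.
training_questions = [
    ("How many vacation days do I have left?", "leave_balance"),
    ("What is my remaining holiday allowance?", "leave_balance"),
    ("How much annual leave can I still take?", "leave_balance"),
    ("When is payday this month?", "pay_date"),
    ("What date will my salary be paid?", "pay_date"),
    ("When do we get paid?", "pay_date"),
]
model = train_intents(training_questions)
print(classify("How many holiday days are left?", model))  # leave_balance
print(classify("When will I get paid?", model))            # pay_date
```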

This post was written by Kelly Frisby, Marketing Director of Dovetail Software, and published on the Dovetail Software website. Dovetail Software is an exhibitor on the HRTech247 Case Management and HR Service Delivery floors, where you can visit its exhibition stand.